Already at part 4 of setting up “The LAB” (it’s going to be a long run), but I’m trying to document this properly for myself and at the same time share my experience in setting this up.

1. Storage

The cluster has been configured and the passwords changed, so now let’s have a look at the storage. There are 3 components that make up the storage:

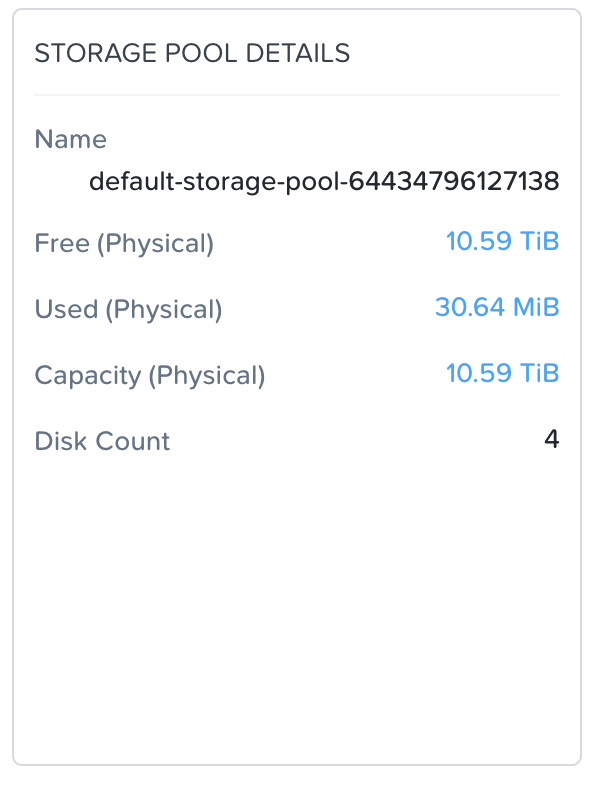

- Storage Pool

- Volume Group

- Storage Container

A storage pool consists of a group of physical disks (in my case 1 SSD & 3 HDDs). It is recommended to have one storage pool per cluster to optimize the distribution of resources like capacity and IOPS, and mine is already configured that way, so I leave it alone. As the name of the storage pool is not of any importance in the whole scheme of things, I will not rename or delete it, but just keep it as-is. As you can see I have 10.59 TiB available.

A volume group is a collection of logically related virtual disks. A volume group is attached to a VM either directly or using iSCSI. For now I will not make any changes here as there is no requirement yet.

A storage container is a subset of the available storage within a storage pool; containers are created to hold the virtual disks (vDisks) used by virtual machines. By default a container is thin provisioned, meaning physical storage is assigned to the container only as needed. Currently there are 3 default containers:

- NutanixManagementShare

- SelfServiceContainer

- default-container-64434796127138

The NutanixManagementShare container is a built-in container used by Nutanix Files and the Self-Service Portal features. It should NOT be deleted, even if you don’t use those features.

Much the same applies to the SelfServiceContainer, which Prism Self Service uses for storage and other feature operations, so I will NOT delete this container either.

The last container is the default container created with a naming convention that doesn’t help a lot. So this one is getting deleted!

After deleting the default container I will of course create a new container with a proper naming convention and the right settings. The name follows the same logic as described in the post “The LAB (Part 3)”, with the following result: NLSOMCTR01 (where CTR stands for Container).
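The naming logic can be sketched as a tiny shell helper. Note that `lab_name` is a hypothetical function, and the split into country, site, role and a two-digit index is only inferred from names like NLSOMVH01 (Netherlands, Someren, Virtual Host 01) and NLSOMCTR01, so treat it as an illustration rather than a fixed standard.

```shell
#!/bin/sh
# Hypothetical helper: build a lab object name from its parts.
# Usage: lab_name COUNTRY SITE ROLE INDEX   (INDEX as a plain integer)
lab_name() {
    # %02d zero-pads the index to two digits (1 -> 01)
    printf '%s%s%s%02d\n' "$1" "$2" "$3" "$4"
}

lab_name NL SOM CTR 1   # prints NLSOMCTR01 (container)
lab_name NL SOM VH 1    # prints NLSOMVH01  (virtual host)
```

Pass the index without a leading zero; some shells would otherwise try to read a value like 08 as an octal number.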

There are several settings that can be defined on the storage container, but for The LAB I will stick with the defaults as there is no need for me to change them.

- Redundancy Factor: This can’t be changed as I have only one node.

- Reserved Capacity: This guarantees an amount of storage that is reserved for the container. Not needed for my environment.

- Advertised Capacity: The capacity that is presented to the host. Not of interest to me.

- Compression: Data is compressed after it has been written; I will use the recommended delay of 60 minutes.

- Deduplication: This can be enabled on the cache only or on capacity as well. I will be running mixed workloads (not sure yet which exactly) and I would need to add RAM to my CVM first, so a no go.

- Erasure Coding: This needs at least 4 nodes, so again a no go.

After hitting the Save button the container is created.
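I used the Prism UI, but the same container could presumably be created from a CVM with `ncli`. The sketch below only prints the command so it can be reviewed first. `container_create_cmd` is a hypothetical helper and `my-storage-pool` is a placeholder, since the real pool name is not shown here. The `name=` and `sp-name=` parameters are written from memory, so check them against `ncli ctr create help` on your own version.

```shell
#!/bin/sh
# Hypothetical helper: print (not run) an ncli command that would create a
# container with default settings in the given storage pool.
# Assumption: "ncli ctr create name=... sp-name=..." is valid on your AOS version.
container_create_cmd() {
    printf 'ncli ctr create name=%s sp-name=%s\n' "$1" "$2"
}

# "my-storage-pool" is a placeholder; look up the real name with "ncli sp ls".
container_create_cmd NLSOMCTR01 my-storage-pool
```

Printing instead of executing keeps the sketch harmless; paste the resulting line into a CVM session only once the pool name is correct.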

2. Host/CVM name

My OCD kicked in when I noticed I still had the default hostname and CVM name in place. In a production environment I would advise keeping the CVM name (as it normally contains the serial number of the block, which helps if you need to check anything on the Nutanix Support portal), but in my lab environment I will change it to something familiar. Luckily this is described very well in the AHV Administration guide, so I will follow that. Connect with SSH to the host and open the network configuration file:

ssh root@192.168.2.14

vi /etc/sysconfig/network

I replaced the hostname with my preferred hostname NLSOMVH01 (Netherlands, Someren, Virtual Host 01) and saved the file.
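For those who prefer not to edit the file by hand in vi, the same change can be sketched with sed. This assumes the file contains a `HOSTNAME=` line, as CentOS-style `/etc/sysconfig/network` files usually do. `set_sysconfig_hostname` is a hypothetical helper, and the demo runs on a scratch file with made-up contents, not on the real host file.

```shell
#!/bin/sh
# Hypothetical helper: rewrite the HOSTNAME= line of a sysconfig-style file.
# Usage: set_sysconfig_hostname FILE NEW_HOSTNAME
set_sysconfig_hostname() {
    file=$1 new=$2
    # Keep a backup next to the original, then write the edited copy back.
    cp "$file" "$file.bak" &&
    sed "s/^HOSTNAME=.*/HOSTNAME=$new/" "$file.bak" > "$file"
}

# Demo on a scratch file (contents are illustrative only).
demo=$(mktemp)
printf 'NETWORKING=yes\nHOSTNAME=NTNX-old-A\n' > "$demo"
set_sysconfig_hostname "$demo" NLSOMVH01
cat "$demo"
rm -f "$demo" "$demo.bak"
```

On the real host the first argument would be `/etc/sysconfig/network`; the `.bak` copy makes it easy to roll back if something looks wrong.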

Log on to the CVM using SSH:

ssh nutanix@192.168.2.5

Restart the Acropolis service so the change will be reflected in Prism.

genesis stop acropolis; cluster start

As I’m connected to the CVM anyway, I can now change the CVM hostname as well. This can be done using the change_cvm_hostname command.

sudo /usr/local/nutanix/cluster/bin/change_cvm_hostname NTNX-NLSOMVH01-CVM

As you can see, the new CVM name starts with NTNX- and ends with -CVM. Nutanix recommends this structure, described in Nutanix KB5226, to avoid issues with anything that identifies the CVM by its name.
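A quick sanity check before running the rename could look like the sketch below. `is_cvm_name` is a hypothetical helper that only tests the NTNX- prefix and -CVM suffix mentioned above; it does not check any other rules the KB article may contain.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the name starts with "NTNX-",
# ends with "-CVM" and has at least one character in between.
is_cvm_name() {
    case $1 in
        NTNX-?*-CVM) return 0 ;;
        *)           return 1 ;;
    esac
}

for n in NTNX-NLSOMVH01-CVM NLSOMVH01-CVM; do
    if is_cvm_name "$n"; then
        echo "$n: ok"
    else
        echo "$n: rejected"
    fi
done
```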

Looks simple enough, but … when logging on to the Prism interface the name hasn’t changed as shown below.

I’m not the only one who runs into this issue, as there is a thread (login required) on this in the Nutanix Community forum. Thanks to pjdlee & Tyler Bogdan I can rename the CVM so it will properly display in Prism.

DISCLAIMER: Don’t do this in any production system!!! Always engage Nutanix Support if this is needed in your production environment.

There is no data in my lab, so I cannot lose anything important. Do this at your own risk; I will not be liable for anything that you might break or lose by blindly copying the commands below.

When connected via SSH to the host I run the following commands:

virsh list --all

virsh dumpxml NTNX-c49478e7-A-CVM > NTNX-NLSOMVH01-CVM.xml

vi NTNX-NLSOMVH01-CVM.xml

In the XML file I replaced the name with the new name NTNX-NLSOMVH01-CVM.
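The edit itself is a one-line change to the `<name>` element of the dumped domain XML, so it can also be sketched with sed instead of vi. `rename_domain_xml` is a hypothetical helper, and the sample file is a heavily trimmed stand-in for a real dump, which contains far more elements.

```shell
#!/bin/sh
# Hypothetical helper: swap the <name> element in a dumped libvirt domain XML.
# Usage: rename_domain_xml FILE OLD_NAME NEW_NAME
rename_domain_xml() {
    file=$1 old=$2 new=$3
    # Keep a backup, then write the edited copy back to the original path.
    cp "$file" "$file.bak" &&
    sed "s|<name>$old</name>|<name>$new</name>|" "$file.bak" > "$file"
}

# Demo on a trimmed, made-up XML file.
demo=$(mktemp)
printf '<domain type="kvm">\n  <name>NTNX-c49478e7-A-CVM</name>\n</domain>\n' > "$demo"
rename_domain_xml "$demo" NTNX-c49478e7-A-CVM NTNX-NLSOMVH01-CVM
grep '<name>' "$demo"
rm -f "$demo" "$demo.bak"
```

Only the `<name>` element is touched; everything else in the dump stays as it was.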

Save the file and run the following commands to update the CVM name:

virsh shutdown NTNX-c49478e7-A-CVM

virsh undefine NTNX-c49478e7-A-CVM

virsh define NTNX-NLSOMVH01-CVM.xml

virsh start NTNX-NLSOMVH01-CVM

virsh autostart NTNX-NLSOMVH01-CVM
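One thing to keep in mind: `virsh shutdown` only asks the guest to power off and returns straight away, so the CVM may still be running when the next command is typed. If the later steps complain, waiting for the old domain to reach the "shut off" state first may help. The sketch below polls `virsh domstate` for that; `wait_for_shut_off` is a hypothetical helper and the 5 second interval and 30 tries are arbitrary choices.

```shell
#!/bin/sh
# Hypothetical helper: poll until a libvirt domain reports "shut off".
# Usage: wait_for_shut_off DOMAIN [TRIES]   (TRIES defaults to 30)
wait_for_shut_off() {
    dom=$1 tries=${2:-30}
    while [ "$tries" -gt 0 ]; do
        # "virsh domstate" prints the current state, e.g. "running" or "shut off"
        [ "$(virsh domstate "$dom" 2>/dev/null)" = "shut off" ] && return 0
        tries=$((tries - 1))
        sleep 5
    done
    return 1   # still not shut off after all tries
}
```

It would be called between the shutdown and the undefine, for example `wait_for_shut_off NTNX-c49478e7-A-CVM || echo "CVM is still running"`.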

After the CVM and cluster were started again, I needed to check whether this was successful, so I immediately logged on to the Prism console and whooohoo, the CVM name is correctly displayed.

So now only the host remains. As AHV is CentOS based, I will use the default Linux commands to check the current hostname and rename it to my preferred one. To apply the new hostname a reboot is required.

hostname -s

hostnamectl set-hostname NLSOMVH01

shutdown -r

Checking the Prism console once more, my OCD is finally satisfied: the CVM name and hostname are set to my preferences and nicely displayed.

I promised in my last post that I would deal with Prism Central and some other stuff, but you will have to wait for the next episode for that, as this one is long enough again…